This is a fairly generic set of considerations for performance-focused coding in .NET and, as with anything performance related, none of it is a silver bullet!

There are many factors that affect code performance, and the advice below could be the complete opposite of what you should do in other situations. If you decide you need better performance from your code, it’s a good idea to investigate exactly what your specific bottlenecks are using tools such as dotTrace, and to try out variations on your approach to remove them.

When do I need to think about performance?

Since coding for performance can sometimes reduce readability or simplicity of the code, there needs to be a reason behind doing it. The two main drivers I have found are “time” and “money” (of course!) which can translate into:

- Time is money

- Research shows that slow website speeds affect customer conversions and SEO, so introducing any slowness into the UX can affect sales.

- If the software is not customer facing, it could be that staff have to wait around for things to complete. Collectively businesses could be losing hours of time waiting for things to happen that could be completing faster.

- Time is time

- Sometimes, you just need to get things done in a certain amount of time.

- For example, if you receive updates from another system every 15 mins, then you have 15 mins to complete the processing so that the system is ready for the next receive.

- Another example is “overnight jobs”, where code is scheduled to run outside of peak hours and needs to complete in a certain amount of time so that everything is done before peak trading hours start.

- Finally for cloud architectures, things like Lambda functions and API Gateways have a pre-defined maximum run time before they are automatically shut down or timed out.

- Money is money

- Performance tweaks often come by being less wasteful in the code - optimising code to be less wasteful usually results in:

- More “mechanical sympathy” - so you can achieve the same results with less intensive CPU and memory requirements, reducing infrastructure or cloud costs.

- If all systems are more efficient, your baseline utilisation and therefore costs will be reduced and the system will have more spare capacity to deal with bursts of activity with less horizontal scaling required.

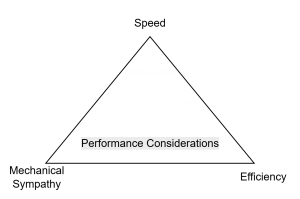

One should always be mindful of performance to avoid obvious pitfalls, especially when better performance can be achieved without impacting readability. Even when not building “time sensitive” functionality, considering “efficiency” can still impact on direct and indirect costs (such as server capacity, cloud costs and wasted time). However, one must also factor in the impact on external systems and other users of the system in question when deciding how aggressive code should be.

Type of Performance Bottleneck and How to Fix

Generally speaking, the activity of a piece of code is either “CPU bound” or “IO bound”. That is to say, some code is limited by its own internal processing power, and some because it’s waiting for external resources (such as other APIs, databases, disk etc.).

The other main cause of performance issues is usually memory constraints, which can manifest in various ways: “Out of Memory” exceptions, slowness caused by paging or, at the very least, impossibly high system requirements.

The “type” of performance issue, in this sense, will affect how you resolve the issue. However they are all ultimately constrained by an “optimal utilisation” (i.e. balancing speed against system overload).

The trick to performance optimisation is therefore to:

- Avoid “hot path” time spent doing “nothing”

- If you spend most of your time waiting (e.g. on IO or in-memory locks), then you need to reduce that time first - even if that means improving the performance of a dependency first.

- Make the most of CPU time you are given

- Choose your data structures and algorithms wisely to avoid wasted CPU cycles.

- Avoid doing the same thing multiple times.

- Don’t starve the resources for everything else.

- Always bear in mind that most systems are not single-user, so don’t design the code to completely saturate everything for a single caller.

- Similarly make the best use of available memory, without wasting it

Below I will further describe the two types of activity, plus memory constraints, and what considerations can be factored in when looking to address performance issues:

IO Bound Activity

Waiting for external resources is sometimes unavoidable in certain architectures, for example when calling external APIs, reading/writing to disk, or loading data from databases or out-of-process caches.

Usually, in dotnet, these calls will at least be using “async/await” syntax (if they’re not, then they probably should be!), which means that the time “wasted” waiting for an external dependency can be used by another part of the code that has CPU bound work to do. However, in most cases that doesn’t reduce the time taken for the “current call”; instead it frees up threads/CPU for “other calls” that are happening in parallel (not forgetting that ASP.NET is naturally “multi-threaded”, since it deals with multiple concurrent requests).

When dealing with IO Bound slowness, things to consider are:

- Improve the source of the slowness

- You might be able to speed up an external API and reap the benefits in your code

- Code changes aside, could the slow API use transport level caching to avoid repeated slowness caused by load?

- You could add data layer indices to speed up query performance

- Consider persistent query models which are “ready to consume” (to avoid runtime complex joining, filtering and aggregating) - this is similar to “caching” but is usually maintained outside of the target code and out of process.

- Pre-loading and caching:

- Where possible (which it’s not always), avoid making “live calls” to external dependencies on your “hot path”.

- When using caches, you’ll need to consider:

- Startup times will be longer

- The memory requirement of the system will increase

- Caches can be stale, so need an update mechanism

- Only load what you need

- If you can’t preload and must load data specific to a request then make sure you limit the loaded data as such.

- Only call APIs/load data that you actually need

- Only load a subset of data from the data store using data-layer predicates (i.e. filter data at source, not in memory).

- Similarly, design your aggregates such that you can load them in a performant way (e.g. if you have a parent/child relationship where you don’t always need the children in-memory then break them apart in the object model).

- Avoid daisy-chaining:

- If you’re making more than one request to one or more external dependencies try doing it in parallel rather than one after another.

- In dotnet this would usually mean not immediately awaiting a task, but instead firing off multiple tasks concurrently (for example using TPL) and awaiting them together with “await Task.WhenAll”.

- Avoid overuse of parallelism

- In direct contrast to the above, you can't always just “fan out” your tasks and await them all, because:

- The external dependency might get slower under pressure, meaning you wait longer overall

- The external dependency might fail more frequently, leading to retries

- You might be rate limited by the external dependency, so you’re not actually getting any more throughput

- The amount of context switching on the local CPU to handle hundreds of concurrent tasks might be counterproductive.

- You might starve the system of resources (ports, bandwidth, threads etc.) which affects ASP.NET’s ability to serve other requests.

- In these cases, it can be better to “chunk” the workload into batches of concurrency that don’t overload the dependency or the local machine with context switching and bandwidth.

- The "size" of each chunk is something you can play around with to get the optimal performance

- Try to cleverly “interleave” the CPU bound and IO bound activity

- For example, instead of doing:

- Step 1 - do a bunch of number crunching on the CPU

- Step 2 - make a request to an external dependency

- Step 3 - await dependency

- See if your logic can work like:

- Step 1 - make a request to an external dependency

- Step 2 - do a bunch of number crunching on the CPU

- Step 3 - await dependency

- Don’t wait for IO completion on the hot path

- This needs careful consideration, as you may need to check for errors etc. but in some cases (for example writing telemetry data) maybe your end user doesn’t really need to wait around.

- However, you can’t just “not await a task”

- ASP.NET does not guarantee completion of tasks after the main request has completed.

- You’d need to queue a background work item, or hand over to a background service e.g. using channels for non-blocking message processing.

- If you have lots of unrelated “after the fact” activity that needs to take place, consider a different architectural pattern such as fan-out event notifications.
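To make the "avoid daisy-chaining" and "avoid overuse of parallelism" points above a little more concrete, here is a minimal sketch. The `FanOut` class and the `fetchAsync` delegate are hypothetical stand-ins for whatever external call you are making; the first method starts every call before awaiting any of them, and the second uses a `SemaphoreSlim` as one possible way of capping how many calls are in flight at once.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class FanOut
{
    // Starts every call up front, then awaits them all together.
    // fetchAsync is a hypothetical stand-in for an external dependency.
    public static async Task<int[]> LoadAllAsync(
        IEnumerable<int> ids, Func<int, Task<int>> fetchAsync)
    {
        // ToArray forces the Select to run now, so all calls are started here.
        Task<int>[] tasks = ids.Select(fetchAsync).ToArray();

        // Task.WhenAll returns the results in the same order as the tasks.
        return await Task.WhenAll(tasks);
    }

    // Same idea, but with at most maxConcurrency calls in flight at a time.
    public static async Task<int[]> LoadAllBoundedAsync(
        IEnumerable<int> ids, Func<int, Task<int>> fetchAsync, int maxConcurrency)
    {
        using var gate = new SemaphoreSlim(maxConcurrency);

        async Task<int> FetchGatedAsync(int id)
        {
            await gate.WaitAsync();          // wait for a free slot
            try { return await fetchAsync(id); }
            finally { gate.Release(); }      // free the slot for the next call
        }

        return await Task.WhenAll(ids.Select(id => FetchGatedAsync(id)).ToArray());
    }
}
```

The value passed as `maxConcurrency` plays the role of the "chunk size" mentioned above, so it is something to tune by measurement rather than guess. `Parallel.ForEachAsync` with `MaxDegreeOfParallelism` is another built-in way of getting a similar bound.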

When you’re looking at a performance trace, if you see lots of time spent in “wait” methods, or methods with a lot of “system time” and not a lot of “user time” then probably you were waiting on some un-managed resource (in some cases that could still be CPU bound, but in most cases the OS is waiting for hardware like network cards, disks, memory etc.).
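The "interleave" idea from the list above can be sketched as follows. The names here (`Interleave`, `CombineAsync`, `fetchAsync`, `SumOfSquares`) are made up for illustration; the point is only the ordering: start the request, do the number crunching while it is in flight, and await the result last.

```csharp
using System;
using System.Threading.Tasks;

public static class Interleave
{
    // fetchAsync is a hypothetical call to an external dependency.
    public static async Task<long> CombineAsync(Func<Task<int>> fetchAsync, int n)
    {
        Task<int> pending = fetchAsync();   // Step 1: the request is now in flight
        long local = SumOfSquares(n);       // Step 2: CPU work overlaps with the wait
        int remote = await pending;         // Step 3: only now wait for the dependency
        return local + remote;
    }

    // Stand-in for "a bunch of number crunching on the CPU".
    private static long SumOfSquares(int n)
    {
        long total = 0;
        for (int i = 1; i <= n; i++) total += (long)i * i;
        return total;
    }
}
```

This only helps when the CPU work does not depend on the response, and when the call really is asynchronous (a method that does most of its work synchronously before its first await will not overlap with anything).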

Another thing to look out for, which is semi-related to IO bound activity but is actually CPU bound, is time spent in deserialization or in iterator methods like “MoveNext”, which show up when HttpClients or ORMs are mapping the response back into dotnet objects. Since that code is outside of your control, you can sometimes consider it part of the IO bound remit and address it using the above (i.e. load less where possible).
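Returning to the "don’t wait for IO completion on the hot path" point in the list above, here is a rough sketch of handing work over to a background consumer using `System.Threading.Channels`. The `TelemetryQueue` class and the `sendAsync` delegate are hypothetical; the hot path only does a non-blocking `TryWrite`, and a separate loop drains the channel and performs the slow IO.

```csharp
using System;
using System.Threading.Channels;
using System.Threading.Tasks;

public sealed class TelemetryQueue
{
    private readonly Channel<string> _channel;

    public TelemetryQueue(int capacity)
    {
        // Bounded so that a slow consumer cannot grow memory without limit.
        _channel = Channel.CreateBounded<string>(capacity);
    }

    // Called on the hot path: never blocks, returns false if the queue is full.
    public bool TryEnqueue(string message) => _channel.Writer.TryWrite(message);

    // Signals that no more messages will be written.
    public void Complete() => _channel.Writer.Complete();

    // Runs off the hot path and drains the queue until Complete is called.
    // sendAsync is a hypothetical stand-in for the slow IO call.
    public async Task<int> RunConsumerAsync(Func<string, Task> sendAsync)
    {
        int sent = 0;
        await foreach (string message in _channel.Reader.ReadAllAsync())
        {
            await sendAsync(message);
            sent++;
        }
        return sent;
    }
}
```

In an ASP.NET Core application the consumer loop would typically be hosted in something like a `BackgroundService`, so that its lifetime is tied to the application rather than to a single request. What to do when `TryEnqueue` returns false (drop, log, or fall back to awaiting) is a design decision this sketch leaves open.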

CPU Bound Activity

CPU bound activity is essentially all the activity within your code where you’re “processing” something - you’re not waiting on any other system at this point, you have the data in memory and simply need to “do something” with it.

This element of performance gets a bit more interesting and nuanced, as there are numerous factors and constraints that will determine how code will perform. For example the maximum capacity of the hardware, the expected utilisation of the hardware, the physical architecture/layout of the hardware, not to mention limitations and changes of the framework and OS. Not that these things can’t also affect performance of IO bound activity, but they tend to factor in more here when benchmarking performance approaches.

Dealing with CPU Bound performance issues requires more diligent thought, but below are a few of the considerations you can factor in:

- Look for a better way of doing it

- Before you get into the micro-optimisations of some other coding choices, really think through the approach and see if there’s a more efficient way of doing it

- Do research on different algorithms and approaches to solving similar problems and look for something more performant.

- A good way to spot this type of optimisation is to step through the code and follow what it’s doing - you should notice areas where there’s wasted computation, or expensive operations that could be avoided.

- Think about any mathematical patterns that emerge from what might currently be a “brute force” approach.

- Push querying/aggregating large datasets out to the data layer

- If the code is performing looping/filtering (remember that LINQ with in-memory collections, is essentially a loop) on a large dataset, see if that filtering/aggregating can be pushed down into the data layer, such as SQL.

- Be mindful of “LINQ” (to objects) in general - it can sometimes look very clean and readable, but under the hood each extension method is essentially another loop of the data using enumerators!

- Pre-calculate as much as possible “outside” of the hot path.

- This can be things like re-structuring data, indexing etc. so that lookups and calculations are fast

- Pick the right data structures/types

- Use things like HashSet and Dictionary to pre-organise your data into something that’s fast to lookup.

- This is better if it can be built outside of the hot path, as there is time/CPU associated with putting things into a dictionary or hash.

- For reference types as a key, you’ll probably want to create “value type semantics” by overriding “GetHashCode” (and Equals), so make sure your GetHashCode function is fast too. Dotnet has built-in methods (HashCode.Combine) for quickly generating hash codes based on scalar values you pass it, so use it!

- Be careful when writing GetHashCode logic for mutable types, you should not use values that will change (or it ends up in the wrong part of the dictionary/hashset).

- Using a “record” will essentially create the above for you, but with little control over what’s included.

- Use “IReadOnlyList” backed by an array for returning read-only collections. This is better than IEnumerable because it directly exposes the “Count” property without requiring enumeration, and better than IReadOnlyCollection because it also exposes an indexer.

- Use “arrays” instead of “lists” when you have a known size (or at least initialise the list capacity) - it saves on memory re-allocation.

- Don’t use “Concurrent” collections unless you really need thread safety (their built-in synchronisation adds overhead that single-threaded code doesn’t need).

- Compare using “class”, “record class”, “struct”, “record struct”, “ref struct” etc. as your type definitions for any data that is (or isn’t) passed around frequently

- Aside from heap vs stack allocation, between value types and reference types, there can be other subtle differences between all the options in how they are mutated (if at all) and how they are compared

- Calling constructors of reference types in a tight loop can lead to performance issues, as every new instance is another heap allocation.

- Try adding a “sealed” keyword, which can improve performance through devirtualisation of calls.

- Don’t enumerate collections multiple times

- Especially with an IEnumerable, looping a collection multiple times can lead to multiple evaluations of the source.

- Try to “get it all done” inside one iteration of the loop

- This can sometimes be tricky when trying to encapsulate looping logic into downstream classes

- If you really need to iterate it twice, ensure the IEnumerable is only evaluated once (e.g. try using “.ToArray()” on an IQueryable).

- Use “.Count” or “.Length” properties, not the extension methods (like .Count() or .Any()) which may incur enumeration overhead, depending on the type.

- Enumerate using indices/materialized collections, not iterators

- When you know the extent of the collection, it can sometimes be faster to loop over using a “for” loop (and indexer), or "foreach" with a fast struct enumerator, rather than using “foreach” against an IEnumerable interface.

- Except where optimized by the collection type (or by the dotnet compiler) “foreach” can sometimes lead to heap allocations, or be generally "slower" due to the indirection.

- If you’re writing your own collection class, ensure you create a fast indexer and/or fast struct based enumerators (NB. some .NET data types cannot be indexed).

- Beware the Garbage Collector!

- Allocating objects on the heap at some point means that memory needs to be garbage collected

- The garbage collector can cause your entire process to pause, so needs to be factored in

- This is one of the reasons why using "for" instead of “foreach” can be better as a general rule as it guarantees you're not unknowingly creating heap allocations in your loops.

- Have a read of the Microsoft Learn article “Garbage Collection and Performance”.

- Parallelise the code

- Be careful with this one: although the “current call” may complete sooner, you risk saturating the CPU at the expense of all the other concurrent calls. Couple that with concurrent callers all spinning up parallel tasks/threads, and the CPU will spend more time context switching than doing anything useful.

- This is more useful in situations where you’ll have very low concurrent users (or it's a single user app)

- Be careful of badly written concurrent code that constantly locks

- If you’re writing concurrent code, use the most lightweight locking patterns possible (SemaphoreSlim, the Interlocked class etc.), or try to use the built-in concurrent types such as ConcurrentDictionary rather than doing your own locking.

- Stick to a reasonable number of concurrent operations - Use the built-in TPL classes, channels, Parallel.ForEachAsync etc. to keep concurrency bounded.

- When partitioning data from a larger collection - use memory slices (e.g. ReadOnlyMemory / Span) to avoid copying and re-allocating.

- Don’t block threads from async code - async Tasks are not guaranteed to be the only thing running on a given thread.

- Consider using Lazy or Non-Lazy loading (whichever makes the most sense)

- Lazy loading can be useful if not all code paths lead to requiring something (whether that’s entities from a DB, or some other dependency via the Lazy class)

- Eager loading can sometimes work better when you can request all data in a single call, rather than having lots of small calls to the DB.

- This overlaps somewhat with IO Bound activity, as a lot of the “processing” is being spent on chatty wasteful data loads.

- Use source generators with System.Text.Json to improve JSON serialization speeds.

- DI container slowness, especially if you resolve dependencies in a loop

- Try not using the DI container to resolve dependencies on the hot code path (If you see a lot of time being spent resolving dependencies, it's worth a shot at using poor man's injection)

- More easily achievable in the centre of an onion architecture where there’s no external dependencies (i.e. the code is split up only for SOLID principles)

- Short circuiting and bounds checking

- If you have a collection of items that need to be “matched” against different inputs, try pre-computing the “bounds” of the collection. If an input is out of bounds, then it won’t match any item.

- The “&&” and “||” operators in C# short-circuit, so try to put your “easier” comparisons in front of the “harder” ones

- E.g. “if (somethingQuick && somethingSlow())” is better than “if (somethingSlow() && somethingQuick)”, at least when “somethingQuick” is false.

- Be aware of “where you are” in the code - for example certain parts of the code may be being called upstream inside of a loop, so small optimisations (or mistakes) can have a bigger impact.
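As an illustration of the "pick the right data structures" advice in the list above, the sketch below pre-builds a `Dictionary` keyed by a reference type that has value semantics. `ProductKey`, `PriceIndex` and the row shape are all invented for the example; the parts taken from the framework are `IEquatable<T>`, `HashCode.Combine` and the `Dictionary` capacity constructor.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical immutable key: equality and hash are based on values that never change.
public sealed class ProductKey : IEquatable<ProductKey>
{
    public ProductKey(string sku, int regionId) { Sku = sku; RegionId = regionId; }

    public string Sku { get; }
    public int RegionId { get; }

    public bool Equals(ProductKey? other) =>
        other != null && Sku == other.Sku && RegionId == other.RegionId;

    public override bool Equals(object? obj) => Equals(obj as ProductKey);

    // HashCode.Combine produces the hash from the same fields used by Equals.
    public override int GetHashCode() => HashCode.Combine(Sku, RegionId);
}

public static class PriceIndex
{
    // Intended to run once, outside the hot path, so that later lookups are cheap.
    public static Dictionary<ProductKey, decimal> Build(
        IReadOnlyList<(string Sku, int RegionId, decimal Price)> rows)
    {
        // Passing the expected size up front avoids repeated internal resizing.
        var index = new Dictionary<ProductKey, decimal>(rows.Count);
        for (int i = 0; i < rows.Count; i++)
        {
            var row = rows[i];
            index[new ProductKey(row.Sku, row.RegionId)] = row.Price;
        }
        return index;
    }
}
```

On the hot path a lookup is then a single `index.TryGetValue(new ProductKey(sku, regionId), out var price)` rather than a scan of the rows. A `record` (or `record struct`) would generate equivalent equality members automatically; whether that, or a struct key that avoids the allocation per lookup, is faster for your case is worth benchmarking.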

The list of things to consider when tuning code for performance goes on and on, and the effect can be drastically different depending on your specific use case. Also, dotnet itself is constantly evolving, to remove old bottlenecks, optimize during compilation etc. So performance should be re-evaluated with every version bump.
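One item from the list above that is easy to demonstrate is the cost of enumerating an `IEnumerable` more than once. In this sketch, `Source` is a hypothetical lazy sequence that reports each time it is evaluated (in real code this might be a database query or an expensive projection); `Wasteful` walks it twice, while `Materialised` evaluates it once and then uses the array's `Length` and indexer.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class EnumerateOnce
{
    // Hypothetical lazy source: onEvaluated runs each time the sequence is enumerated.
    public static IEnumerable<int> Source(Action onEvaluated)
    {
        onEvaluated();
        for (int i = 1; i <= 5; i++) yield return i;
    }

    // Count() and Sum() each enumerate the sequence, so the source runs twice.
    public static (int Count, int Sum) Wasteful(IEnumerable<int> items) =>
        (items.Count(), items.Sum());

    // ToArray() evaluates the source once; everything after that is in memory.
    public static (int Count, int Sum) Materialised(IEnumerable<int> items)
    {
        int[] buffer = items.ToArray();
        int sum = 0;
        for (int i = 0; i < buffer.Length; i++) sum += buffer[i];
        return (buffer.Length, sum);
    }
}
```

Both methods return the same answer; the difference is only in how many times the underlying source is evaluated. Materialising does cost an array allocation, so for small in-memory collections it is worth measuring before assuming it is a win.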

Memory Issues

If your application is using too much memory it can lead to unrealistic minimum system requirements, or when running on insufficient equipment can lead to slowness (caused by the OS paging memory to disk) or exceptions (such as “Out of Memory” exception).

There are a couple of things you can do code-wise to try to combat “wasteful” memory allocations, such as:

- Be careful of “strings”

- Try to avoid mutating strings. Strings are actually immutable even though syntactically you don't see warnings for mutating them (they behave more like a record “with” statement and will create a new instance with every change).

- Instead of mutating a string, try to define it once using string interpolation, or if you’re building it up over many lines of code, use a “StringBuilder”.

- Try “string interning” for long lived string data - this is provided by dotnet as a way of de-duplicating strings that have the same value (since they’re immutable, they can all point to the same instance)

- This can be helpful, for example, when you’re consuming a lot of de-normalised data to store in memory, where lots of objects would have the same value repeated over and over.

- However it should be tested to see if it makes things better or worse (for example on short lived data this can make things worse, as interned strings are unlikely to be released until the runtime terminates)

- Avoid duplicating objects (or parts of objects) unnecessarily

- Check whether any or all of your object could use a singleton or static pattern to reduce the number of instances of certain parts of the data.

- Again, immutability is helpful here as everyone can share a reference to an immutable object without concern but even mutable data can be shared if coded to be safely mutated.

- It doesn’t have to be everything, maybe “some” parts of the objects could be static/singleton.

- For local/short lived variables consider using types/annotations (struct, stackalloc) that would avoid putting objects on the heap, as they will be cleaned out of memory sooner (i.e. don’t have to hang around for GC).

- Watch out for memory leaks. Easier said than done, but be careful especially around un-managed resources and things that implement IDisposable.

- Balance memory against any “IO Bound” optimizations you did - sometimes the “least worst” thing is to wait for the data layer to load you a record, rather than trying to keep the entire DB in memory.

- Memory use can become a bigger issue when you’re scaling horizontally on shared infrastructure where all instances are taking from a shared pool of memory. In these cases, where you need horizontal scaling for CPU performance, try sharding the data/traffic so that not all instances require all data.
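The string advice at the top of the list above can be sketched like this. `StringMemory` and its method names are invented for the example; `StringBuilder` and `string.Intern` are the framework pieces being shown.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class StringMemory
{
    // Builds the result in one buffer instead of creating a new string per "+=".
    public static string JoinWithBuilder(IReadOnlyList<string> parts)
    {
        var builder = new StringBuilder();
        for (int i = 0; i < parts.Count; i++)
        {
            if (i > 0) builder.Append(',');
            builder.Append(parts[i]);
        }
        return builder.ToString();
    }

    // Replaces each value with the interned instance, so equal strings share one object.
    public static string[] InternAll(IReadOnlyList<string> values)
    {
        var result = new string[values.Count];
        for (int i = 0; i < values.Count; i++)
        {
            result[i] = string.Intern(values[i]);
        }
        return result;
    }
}
```

For a simple join like this, `string.Join(",", parts)` does the same job; the builder is shown because it generalises to strings assembled over many lines of code. Interning trades a lookup per string for fewer long-lived duplicates, so it is best reserved for long lived, heavily repeated values and checked with a memory profiler.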

Ultimately, except for very obvious issues you can see from a static analysis of the code or through step-by-step debugging, you’re going to need to use performance profiling tools such as dotTrace and dotMemory to really pinpoint where the CPU time and memory is going, make the necessary adjustments and re-test your performance. You can also quickly test and compare the performance of your code implementations using tools such as BenchmarkDotNet. I hope this list helps to give you some ideas!